🌏 #1 | “ChatGPT for X” is coming fast, driven by rapid price reductions in OpenAI’s platform service

💡 From ChatGPT to "ChatGPT for X"

When OpenAI launched ChatGPT in November 2022, it kicked off the generative AI era. Big tech companies and startups alike are racing to integrate generative AI into their existing products and to build AI-native ones.

“ChatGPT for X” applications will take the ability to talk to your computer, which new AI models enable, and apply it to specific use cases, from summarising documents to fitness coaching.

💰 The cost of building AI applications

To develop these applications, most companies won’t be training their own AI models. Training an AI model comparable to GPT-3 is estimated to cost around $5 million on the low end for computing power alone.

Instead, most companies will build their applications using platforms (API services) provided by companies like OpenAI and Anthropic.

These platforms enable software engineers to integrate the power of existing AI models into the applications they’re building. They have three key features:

1️⃣ Plug and play: Getting started takes only a few lines of code.

2️⃣ Prompt in, completion out: Applications are programmed to send prompt text to an AI platform and receive completion text in response.

3️⃣ Pay as you go: Pricing is based on the quantity of prompt and completion text processed. Usage is measured in “tokens”, with 1K tokens equal to about 750 words.
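To make the pay-as-you-go arithmetic concrete, here's a minimal sketch in Python. It assumes the rule of thumb above (1K tokens is about 750 words) and a single price that applies to prompt and completion text alike. The function names and the example word counts are made up for illustration, and real platforms count tokens with a tokenizer rather than by word count.

```python
# Rule of thumb from the text: 1K tokens is roughly 750 words.
WORDS_PER_1K_TOKENS = 750


def estimate_tokens(word_count: float) -> float:
    """Approximate the number of tokens in a piece of text from its word count."""
    return word_count / WORDS_PER_1K_TOKENS * 1000


def estimate_cost(prompt_words: float, completion_words: float,
                  price_per_1k_tokens: float) -> float:
    """Estimate the cost of one request, billing prompt and completion at one price."""
    total_tokens = estimate_tokens(prompt_words) + estimate_tokens(completion_words)
    return total_tokens / 1000 * price_per_1k_tokens


if __name__ == "__main__":
    # Hypothetical request: a 600-word prompt and a 150-word completion
    # at an assumed price of $0.002 / 1K tokens.
    cost = estimate_cost(600, 150, 0.002)
    print(f"Estimated cost: ${cost:.4f}")
```

A single request costs a fraction of a cent at this price, so the numbers only become interesting at scale.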

📉 OpenAI is driving down AI costs

The Web 2.0 era has been driven by the near-zero marginal cost of scaling traditional websites to additional users. In the generative AI era, things will be different. Generative AI models are resource-intensive, and their meaningful marginal costs are reflected in usage-based platform pricing.

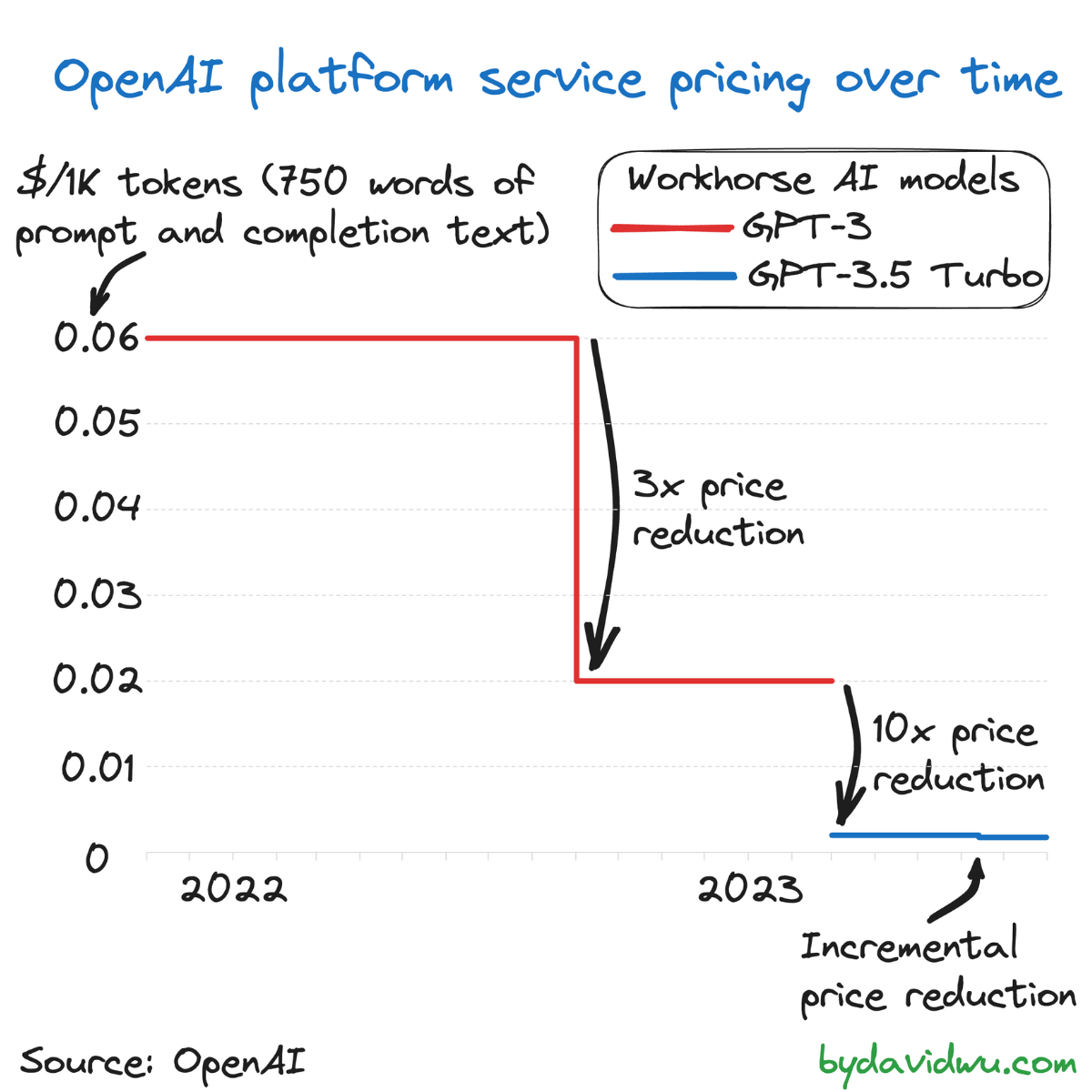

That makes the downward trajectory of OpenAI’s platform pricing since late 2021 remarkable:

1️⃣ In November 2021, OpenAI removed the waitlist for its GPT-3 platform offering, with pricing at $0.06 / 1K tokens. In September 2022, OpenAI passed on a 3x price reduction to its platform users, reducing the price to $0.02 / 1K tokens.

2️⃣ In March 2023, OpenAI released GPT-3.5 Turbo on its platform at $0.002 / 1K tokens, 10x cheaper than GPT-3.

3️⃣ This was followed by an incremental reduction in June 2023 to today's price of $0.00175 / 1K tokens — a 97% price reduction compared to November 2021.
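If you'd like to check the 3x, 10x and 97% figures yourself, the sketch below reproduces them from the four prices quoted above. The helper names are my own, and the prices are simply the per-1K-token figures from this post.

```python
# Price points quoted in the post, in dollars per 1K tokens.
PRICES = [
    ("November 2021", "GPT-3", 0.06),
    ("September 2022", "GPT-3", 0.02),
    ("March 2023", "GPT-3.5 Turbo", 0.002),
    ("June 2023", "GPT-3.5 Turbo", 0.00175),
]


def fold_reduction(old_price: float, new_price: float) -> float:
    """How many times cheaper the new price is, e.g. 3.0 for a 3x reduction."""
    return old_price / new_price


def percent_reduction(old_price: float, new_price: float) -> float:
    """Price reduction as a percentage of the old price."""
    return (1 - new_price / old_price) * 100


if __name__ == "__main__":
    launch_price = PRICES[0][2]
    # Compare each price point with the one before it and with the launch price.
    for (_, _, previous), (date, model, price) in zip(PRICES, PRICES[1:]):
        print(
            f"{date} ({model}): ${price} / 1K tokens, "
            f"{fold_reduction(previous, price):.1f}x cheaper than the previous price, "
            f"{percent_reduction(launch_price, price):.0f}% below November 2021"
        )
```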

✨ Why this matters

To see why this is a big deal, imagine your favourite ChatGPT for X startup idea becomes a reality.

Let’s assume it has 5M users and that, on average, each user’s interactions with the application lead to 10K tokens (about 7.5K words) of prompt and completion text being processed each week.

That adds up to 50 billion tokens a week. Using GPT-3 in late 2022 at $0.02 / 1K tokens, platform costs would total $1M / week. In comparison, using GPT-3.5 Turbo today at $0.00175 / 1K tokens, this cost would be $87.5K / week. That's a make-or-break difference.
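Here's the back-of-the-envelope calculation as a short Python sketch, so you can plug in your own assumptions. The function name is hypothetical, and the user count, weekly usage and prices are the illustrative figures from this post rather than data from a real application.

```python
def weekly_platform_cost(users: int, tokens_per_user_per_week: int,
                         price_per_1k_tokens: float) -> float:
    """Weekly platform bill in dollars for a given user base and usage level."""
    total_tokens = users * tokens_per_user_per_week
    return total_tokens / 1000 * price_per_1k_tokens


if __name__ == "__main__":
    USERS = 5_000_000                  # assumed user base
    TOKENS_PER_USER_PER_WEEK = 10_000  # assumed usage, about 7.5K words

    gpt3_late_2022 = weekly_platform_cost(USERS, TOKENS_PER_USER_PER_WEEK, 0.02)
    gpt35_turbo_today = weekly_platform_cost(USERS, TOKENS_PER_USER_PER_WEEK, 0.00175)

    print(f"GPT-3 (late 2022): ${gpt3_late_2022:,.0f} / week")     # $1,000,000 / week
    print(f"GPT-3.5 Turbo:     ${gpt35_turbo_today:,.0f} / week")  # $87,500 / week
```

Halving either the user count or the tokens per user halves the bill, which is why per-token pricing matters so much at this scale.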

✨ If you're a software engineer and enjoyed this post, then you might also enjoy my book, Fullstack GPT, aimed at helping developers quickly get started with the basics of building fullstack AI web apps with the OpenAI API, Next.js and Tailwind CSS.

📨 I'm writing fortnightly about tech and economics as an economics and policy wonk turned software engineer. Join my newsletter to receive new posts in your inbox every other Monday. No spam, unsubscribe anytime.